Creating an S3 repository for Elasticsearch using MinIO as the S3 object storage backend

Please note that this setup should not be used in production; it is a lab environment meant to provide guidance for configuration.

In many Kubernetes environments, you can use the cloud provider's object storage, configured through ECK, for your snapshot repositories. This guide is aimed at third-party object storage that you might run when you are not on a cloud provider, and at modifying existing deployments to configure new snapshot repositories.

I will perform the steps in a GKE environment, in the default namespace.

Install MinIO

Configure SSL

Generate a self-signed certificate and key for the MinIO deployment

```
$ openssl req -x509 -sha256 -nodes -newkey rsa:4096 -days 365 -subj "/CN=minio-service" -addext "subjectAltName=DNS:minio-service.default.svc.cluster.local" -keyout private.key -out public.crt
$ ls -1
private.key
public.crt
```

Let's create the secret
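Before creating the secret, it can be worth confirming that the certificate carries the expected subjectAltName, because mc and Elasticsearch both check the hostname in their endpoint URL against it. The helper below (cert_has_dns_san is a hypothetical name) is a minimal sketch that assumes openssl is on your PATH: it reads the SAN line from the openssl x509 -text output and looks for an exact DNS: entry.

```shell
# Hypothetical helper: exit 0 if CERT lists NAME as an exact DNS entry in its
# subjectAltName extension, non-zero otherwise. Assumes openssl is installed.
cert_has_dns_san() {
  local cert="$1" name="$2"
  # Print the certificate as text, keep the line right after the SAN header,
  # split it on commas, trim whitespace, then match "DNS:<name>" exactly.
  openssl x509 -in "$cert" -noout -text \
    | awk '/X509v3 Subject Alternative Name/ { getline; print }' \
    | tr ',' '\n' \
    | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' \
    | grep -qxF "DNS:${name}"
}

# Example:
#   cert_has_dns_san public.crt minio-service.default.svc.cluster.local && echo "SAN ok"
```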

```
$ kubectl create secret generic minio-service-secret \
    --from-file=./public.crt \
    --from-file=./private.key
secret/minio-service-secret created
```

Install MinIO as a standalone deployment

We will install MinIO as a single-pod deployment, as outlined in https://github.com/kubernetes/examples/tree/master/staging/storage/minio#minio-standalone-server-deployment

Create the PVC

By default, the PVC defined in https://raw.githubusercontent.com/kubernetes/examples/master/staging/storage/minio/minio-standalone-pvc.yaml requests 10Gi. If you need a different size, download the file and edit it before creating the PVC.
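If you do want a different size, one way to avoid hand-editing is to rewrite the storage request while piping the manifest into kubectl. This is a sketch: set_pvc_size is a hypothetical helper, and it assumes the manifest contains a single storage: key, as a plain PVC manifest normally does.

```shell
# Hypothetical helper: read a PVC manifest on stdin and replace the value of
# its "storage:" request with SIZE (for example 50Gi). Lines such as
# storageClassName: are left alone because the key must be exactly "storage:".
set_pvc_size() {
  local size="$1"
  sed -E "s/^([[:space:]]*storage:[[:space:]]*).*/\1${size}/"
}

# Example:
#   curl -s https://raw.githubusercontent.com/kubernetes/examples/master/staging/storage/minio/minio-standalone-pvc.yaml \
#     | set_pvc_size 50Gi | kubectl create -f -
```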

```
$ kubectl create -f https://raw.githubusercontent.com/kubernetes/examples/master/staging/storage/minio/minio-standalone-pvc.yaml
persistentvolumeclaim/minio-pv-claim created
$ kubectl get pvc
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
minio-pv-claim   Bound    pvc-9709f953-2a75-4984-ad8c-26d21c578b79   10Gi       RWO            standard       64m
```

Create the deployment

MinIO will listen on port 9000, with the access key set to minio and the secret key set to minio123.
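The manifest below sets MINIO_ACCESS_KEY and MINIO_SECRET_KEY, which recent MinIO releases report as deprecated in favor of MINIO_ROOT_USER and MINIO_ROOT_PASSWORD (the startup log further down shows this warning). If you prefer the newer names, a sketch using kubectl set env on the running deployment could look like this. The helper name is hypothetical, and the KUBECTL variable is an override added here only so the command can be printed (KUBECTL=echo) instead of run. Changing the environment edits the pod template, so the pod is recreated.

```shell
# Hypothetical helper: set MINIO_ROOT_USER/MINIO_ROOT_PASSWORD on the
# minio-deployment and remove the deprecated variables ("NAME-" removes a
# variable with kubectl set env). KUBECTL defaults to kubectl.
use_minio_root_credentials() {
  local user="$1" password="$2"
  "${KUBECTL:-kubectl}" set env deployment/minio-deployment \
    "MINIO_ROOT_USER=${user}" "MINIO_ROOT_PASSWORD=${password}" \
    MINIO_ACCESS_KEY- MINIO_SECRET_KEY-
}

# Example:
#   use_minio_root_credentials minio minio123
```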

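We will edit minio-standalone-deployment.yaml to mount the certificate secret and enable HTTPS. MinIO looks for public.crt and private.key in its certs directory (/root/.minio/certs in this container) and for additional trusted CAs in the CAs subdirectory, which is why public.crt is mounted twice.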

```
$ cat minio-standalone-deployment.yaml
apiVersion: apps/v1 # for k8s versions before 1.9.0 use apps/v1beta2 and before 1.8.0 use extensions/v1beta1
kind: Deployment
metadata:
  # This name uniquely identifies the Deployment
  name: minio-deployment
spec:
  selector:
    matchLabels:
      app: minio
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        # Label is used as selector in the service.
        app: minio
    spec:
      # Refer to the PVC created earlier
      volumes:
      - name: storage
        persistentVolumeClaim:
          # Name of the PVC created earlier
          claimName: minio-pv-claim
      - name: minio-certs
        secret:
          secretName: minio-service-secret
          items:
          - key: public.crt
            path: public.crt
          - key: private.key
            path: private.key
          - key: public.crt
            path: CAs/public.crt
      containers:
      - name: minio
        # Pulls the default Minio image from Docker Hub
        image: minio/minio:latest
        args:
        - server
        - /storage
        env:
        # Minio access key and secret key
        - name: MINIO_ACCESS_KEY
          value: "minio"
        - name: MINIO_SECRET_KEY
          value: "minio123"
        ports:
        - containerPort: 9000
          hostPort: 9000
        # Mount the volume into the pod
        volumeMounts:
        - name: storage # must match the volume name, above
          mountPath: "/storage"
        - name: minio-certs
          mountPath: "/root/.minio/certs"
```

```
$ kubectl create -f minio-standalone-deployment.yaml
deployment.apps/minio-deployment created
$ kubectl get deployments
NAME               READY   UP-TO-DATE   AVAILABLE   AGE
minio-deployment   1/1     1            1           26s
$ kubectl get pods
NAME                               READY   STATUS    RESTARTS   AGE
minio-deployment-d86975f6f-2qlrs   1/1     Running   0          65m
```
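Rather than copying the generated pod name (its suffix changes whenever the pod is recreated), you can let kubectl resolve the pod from the deployment. A minimal sketch with a hypothetical helper name; KUBECTL is an override added here so the commands can be printed (KUBECTL=echo) instead of run.

```shell
# Hypothetical helper: wait for the MinIO rollout to finish, then follow the
# logs of a pod picked by kubectl from deployment/minio-deployment.
minio_wait_and_logs() {
  local timeout="${1:-120s}"
  "${KUBECTL:-kubectl}" rollout status deployment/minio-deployment --timeout="$timeout" || return 1
  "${KUBECTL:-kubectl}" logs -f deployment/minio-deployment
}

# Example:
#   minio_wait_and_logs 180s
```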

```
$ kubectl logs -f minio-deployment-d86975f6f-fwrbp
WARNING: MINIO_ACCESS_KEY and MINIO_SECRET_KEY are deprecated.
Please use MINIO_ROOT_USER and MINIO_ROOT_PASSWORD
Formatting 1st pool, 1 set(s), 1 drives per set.
WARNING: Host local has more than 0 drives of set. A host failure will result in data becoming unavailable.
MinIO Object Storage Server
Copyright: 2015-2024 MinIO, Inc.
License: GNU AGPLv3 - https://www.gnu.org/licenses/agpl-3.0.html
Version: RELEASE.2024-07-10T18-41-49Z (go1.22.5 linux/amd64)

API: https://10.21.129.21:9000 https://127.0.0.1:9000
WebUI: https://10.21.129.21:41657 https://127.0.0.1:41657

Docs: https://min.io/docs/minio/linux/index.html
Status: 1 Online, 0 Offline.

STARTUP WARNINGS:
- The standard parity is set to 0. This can lead to data loss.
```

Create the service

The default service manifest creates a LoadBalancer to expose MinIO outside the cluster. We don't need that here: this is a lab, and MinIO only has to be reachable from inside the cluster, so a ClusterIP service is enough.

Edit minio-standalone-service.yaml to remove the type: LoadBalancer line, then apply it.
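If you'd rather not edit the file by hand, a sed filter can drop the line before the manifest reaches kubectl. This is a sketch (strip_loadbalancer is a hypothetical name) that only removes lines consisting solely of type: LoadBalancer; with no type set, a Service defaults to ClusterIP.

```shell
# Hypothetical helper: read a Service manifest on stdin and delete any line
# that is exactly "type: LoadBalancer" (ignoring surrounding whitespace).
strip_loadbalancer() {
  sed '/^[[:space:]]*type:[[:space:]]*LoadBalancer[[:space:]]*$/d'
}

# Example:
#   strip_loadbalancer < minio-standalone-service.yaml | kubectl apply -f -
```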

```
$ cat minio-standalone-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: minio-service
spec:
  ports:
    - name: https
      port: 9000
      targetPort: 9000
      protocol: TCP
  selector:
    app: minio # must match with the label used in minio deployment
$ kubectl apply -f minio-standalone-service.yaml
service/minio-service created
$ kubectl get pod,svc
NAME                               READY   STATUS    RESTARTS   AGE
minio-deployment-d86975f6f-2qlrs   1/1     Running   0          65m

NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes      ClusterIP   10.53.112.1     <none>        443/TCP    3h39m
minio-service   ClusterIP   10.53.114.100   <none>        9000/TCP   26s
```

Connect to the MinIO server using the MinIO client (mc)

We will run a MinIO client pod in the cluster so that we can send commands to the MinIO server, and configure the CA so that mc can communicate with the server over TLS.

Create a manifest for mc:

- we will re-use the minio-service-secret to provide the CA

```
$ cat minio-client.yaml
apiVersion: v1
kind: Pod
metadata:
  name: minio-client
spec:
  containers:
  - command:
    - /bin/sh
    - -c
    - while true; do sleep 5s; done
    image: minio/mc
    imagePullPolicy: Always
    name: minio
    volumeMounts:
    - name: minio-certs
      mountPath: "/root/.mc/certs"
  volumes:
  - name: minio-certs
    secret:
      secretName: minio-service-secret
      items:
      - key: public.crt
        path: public.crt
      - key: private.key
        path: private.key
      - key: public.crt
        path: CAs/public.crt
$ kubectl apply -f minio-client.yaml
pod/minio-client created
```

Use mc to create buckets
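Before running mc, it helps to make sure the client pod is Ready and that the CA landed where mc looks for trusted CAs (~/.mc/certs/CAs, which is /root/.mc/certs/CAs in this pod). A minimal sketch with a hypothetical helper name; KUBECTL is an override added here so the commands can be printed (KUBECTL=echo) instead of run.

```shell
# Hypothetical helper: block until pod/minio-client is Ready, then list the
# CA directory that mc reads its trusted certificates from.
wait_for_mc_pod() {
  local timeout="${1:-120s}"
  "${KUBECTL:-kubectl}" wait --for=condition=Ready pod/minio-client --timeout="$timeout" || return 1
  "${KUBECTL:-kubectl}" exec minio-client -- ls /root/.mc/certs/CAs
}

# Example:
#   wait_for_mc_pod 180s
```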

Now let's configure the MinIO client.

First, we need the name of the service:

```
$ kubectl get service
NAME            TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes      ClusterIP   10.53.112.1     <none>        443/TCP    4h8m
minio-service   ClusterIP   10.53.114.100   <none>        9000/TCP   46m
```

We can see that the service is named minio-service.
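The URL passed to mc alias set (and later to Elasticsearch) is the service's in-cluster DNS name, service.namespace.svc.cluster-domain, and it has to match the DNS entry in the certificate's SAN. A small sketch that builds it, assuming the default cluster domain cluster.local (svc_fqdn and CLUSTER_DOMAIN are hypothetical names introduced here):

```shell
# Hypothetical helper: print <service>.<namespace>.svc.<cluster domain>.
# Namespace defaults to "default"; the cluster domain defaults to cluster.local
# and can be overridden with CLUSTER_DOMAIN.
svc_fqdn() {
  local service="$1" namespace="${2:-default}"
  printf '%s.%s.svc.%s\n' "$service" "$namespace" "${CLUSTER_DOMAIN:-cluster.local}"
}

# Example:
#   kubectl exec minio-client -- mc alias set minio "https://$(svc_fqdn minio-service):9000" minio minio123
```

If MinIO runs in another namespace, the certificate has to be regenerated with the matching name, otherwise TLS hostname verification fails.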

```
$ kubectl exec --stdin --tty minio-client -- mc alias set minio https://minio-service.default.svc.cluster.local:9000 minio minio123
mc: Configuration written to `/root/.mc/config.json`. Please update your access credentials.
mc: Successfully created `/root/.mc/share`.
mc: Initialized share uploads `/root/.mc/share/uploads.json` file.
mc: Initialized share downloads `/root/.mc/share/downloads.json` file.
Added `minio` successfully.
$ kubectl exec --stdin --tty minio-client -- mc ls minio
$
```

The empty listing shows there are no buckets yet. Let's create two buckets named default and default2.
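The mc mb calls that follow can also be wrapped in a loop. mc mb accepts --ignore-existing, which makes the loop safe to re-run. A sketch with a hypothetical helper name, assuming the minio-client pod and the minio alias configured above; KUBECTL is an override added here so the commands can be printed (KUBECTL=echo) instead of run.

```shell
# Hypothetical helper: create each named bucket under the given mc alias,
# skipping buckets that already exist. Stops at the first failure.
make_buckets() {
  local mc_alias="$1" bucket
  shift
  for bucket in "$@"; do
    "${KUBECTL:-kubectl}" exec minio-client -- mc mb --ignore-existing "${mc_alias}/${bucket}" || return 1
  done
}

# Example:
#   make_buckets minio default default2
```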

```
$ kubectl exec --stdin --tty minio-client -- mc ls
$ kubectl exec --stdin --tty minio-client -- mc mb minio/default
Bucket created successfully `minio/default`.
$ kubectl exec --stdin --tty minio-client -- mc mb minio/default2
Bucket created successfully `minio/default2`.
$ kubectl exec --stdin --tty minio-client -- mc ls minio
[2024-07-10 22:09:59 UTC] 0B default/
[2024-07-10 22:10:03 UTC] 0B default2/
```

We are done with the MinIO setup.

We could have port-forwarded to the MinIO web console and created the buckets there, but I wanted to show the MinIO client in this demo. Elasticsearch also works with a plain HTTP MinIO server; I added TLS on top mainly for completeness. You can omit the SSL configuration, switch everything to http, and it will still work with Elasticsearch.

Install Elasticsearch

We will install Elasticsearch and Kibana with the ECK operator. I will use my script https://www.gooksu.com/2022/09/new-elastic-kubernetes-script-deploy-elastick8s-sh/ to deploy the stack.

- the repository-s3 plugin is no longer needed; S3 repository support is now included with Elasticsearch

Install a basic stack

We will install Elasticsearch and Kibana 8.14.1 via my script, using ECK operator 2.13.0.

```
$ ./deploy-elastick8s.sh stack 8.14.1 2.13.0
[DEBUG] jq found
[DEBUG] docker found & running
[DEBUG] kubectl found
[DEBUG] openssl found
[DEBUG] container image docker.elastic.co/elasticsearch/elasticsearch:8.14.1 is valid
[DEBUG] ECK 2.13.0 version validated.
[DEBUG] This might take a while. In another window you can watch -n2 kubectl get all or kubectl get events -w to watch the stack being stood up
********** Deploying ECK 2.13.0 OPERATOR **************
[DEBUG] ECK 2.13.0 downloading crds: crds.yaml
[DEBUG] ECK 2.13.0 downloading operator: operator.yaml
⠏ [DEBUG] Checking on the operator to become ready. If this does not finish in ~5 minutes something is wrong
NAME                     READY   STATUS    RESTARTS   AGE
pod/elastic-operator-0   1/1     Running   0          4s

NAME                             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/elastic-webhook-server   ClusterIP   10.53.115.167   <none>        443/TCP   4s

NAME                                READY   AGE
statefulset.apps/elastic-operator   1/1     4s
[DEBUG] ECK 2.13.0 Creating license.yaml
[DEBUG] ECK 2.13.0 Applying trial license
********** Deploying ECK 2.13.0 STACK 8.14.1 CLUSTER eck-lab **************
[DEBUG] ECK 2.13.0 STACK 8.14.1 CLUSTER eck-lab Creating elasticsearch.yaml
[DEBUG] ECK 2.13.0 STACK 8.14.1 CLUSTER eck-lab Starting elasticsearch cluster.
⠏ [DEBUG] Checking to ensure all readyReplicas(3) are ready for eck-lab-es-default. IF this does not finish in ~5 minutes something is wrong
NAME                 READY   AGE
eck-lab-es-default   3/3     87s
[DEBUG] ECK 2.13.0 STACK 8.14.1 CLUSTER eck-lab Creating kibana.yaml
[DEBUG] ECK 2.13.0 STACK 8.14.1 CLUSTER eck-lab Starting kibana.
⠏ [DEBUG] Checking to ensure all readyReplicas(1) are ready for eck-lab-kb. IF this does not finish in ~5 minutes something is wrong
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
eck-lab-kb   1/1     1            1           37s
[DEBUG] Grabbed elastic password for eck-lab: 80zMWFOt0nJ3844zK95i2AHs
[DEBUG] Grabbed elasticsearch endpoint for eck-lab: https://34.122.102.218:9200
[DEBUG] Grabbed kibana endpoint for eck-lab: https://34.70.50.232:5601
[SUMMARY] ECK 2.13.0 STACK 8.14.1
NAME                                   READY   STATUS    RESTARTS   AGE
pod/eck-lab-es-default-0               1/1     Running   0          2m9s
pod/eck-lab-es-default-1               1/1     Running   0          2m8s
pod/eck-lab-es-default-2               1/1     Running   0          2m8s
pod/eck-lab-kb-67845cfd76-vk8mq        0/1     Running   0          12s
pod/eck-lab-kb-6df5d89fff-mnqkg        1/1     Running   0          40s
pod/minio-client                       1/1     Running   0          47m
pod/minio-deployment-d86975f6f-fwrbp   1/1     Running   0          104m

NAME                               TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)          AGE
service/eck-lab-es-default         ClusterIP      None            <none>           9200/TCP         2m10s
service/eck-lab-es-http            LoadBalancer   10.53.113.110   34.122.102.218   9200:31482/TCP   2m11s
service/eck-lab-es-internal-http   ClusterIP      10.53.113.16    <none>           9200/TCP         2m11s
service/eck-lab-es-transport       ClusterIP      None            <none>           9300/TCP         2m11s
service/eck-lab-kb-http            LoadBalancer   10.53.112.217   34.70.50.232     5601:32450/TCP   42s
service/kubernetes                 ClusterIP      10.53.112.1     <none>           443/TCP          5h15m
service/minio-service              ClusterIP      10.53.114.100   <none>           9000/TCP         96m

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/eck-lab-kb         1/1     1            1           42s
deployment.apps/minio-deployment   1/1     1            1           104m

NAME                                         DESIRED   CURRENT   READY   AGE
replicaset.apps/eck-lab-kb-67845cfd76        1         1         0       13s
replicaset.apps/eck-lab-kb-6df5d89fff        1         1         1       41s
replicaset.apps/minio-deployment-d86975f6f   1         1         1       104m

NAME                                  READY   AGE
statefulset.apps/eck-lab-es-default   3/3     2m10s
[SUMMARY] STACK INFO:
eck-lab elastic password: 80zMWFOt0nJ3844zK95i2AHs
eck-lab elasticsearch endpoint: https://34.122.102.218:9200
eck-lab kibana endpoint: https://34.70.50.232:5601
[SUMMARY] ca.crt is located in /Users/jlim/elastick8s/ca.crt
[NOTE] If you missed the summary its also in /Users/jlim/elastick8s/notes
[NOTE] You can start logging into kibana but please give things few minutes for proper startup and letting components settle down.
```

```
$ curl -k -u "elastic:80zMWFOt0nJ3844zK95i2AHs" https://34.122.102.218:9200
{
  "name" : "eck-lab-es-default-2",
  "cluster_name" : "eck-lab",
  "cluster_uuid" : "1PmLruW0RamUM5HvEiN5qw",
  "version" : {
    "number" : "8.14.1",
    "build_flavor" : "default",
    "build_type" : "docker",
    "build_hash" : "93a57a1a76f556d8aee6a90d1a95b06187501310",
    "build_date" : "2024-06-10T23:35:17.114581191Z",
    "build_snapshot" : false,
    "lucene_version" : "9.10.0",
    "minimum_wire_compatibility_version" : "7.17.0",
    "minimum_index_compatibility_version" : "7.0.0"
  },
  "tagline" : "You Know, for Search"
}
```

Create secrets for the keystore

To create secrets that ECK will add to the Elasticsearch keystore, we follow https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-es-secure-settings.html
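The two secrets created below differ only in the S3 client name, and by default ECK adds every key of a secret listed under spec.secureSettings to the keystore. A hypothetical helper that creates one secret per client, following the same client-secrets naming used here; KUBECTL is an override added so the command can be printed (KUBECTL=echo) instead of run.

```shell
# Hypothetical helper: create "<client>-secrets" holding
# s3.client.<client>.access_key and s3.client.<client>.secret_key.
# Note: --from-literal values end up in your shell history (fine for a lab).
create_s3_client_secret() {
  local client="$1" access_key="$2" secret_key="$3"
  "${KUBECTL:-kubectl}" create secret generic "${client}-secrets" \
    --from-literal="s3.client.${client}.access_key=${access_key}" \
    --from-literal="s3.client.${client}.secret_key=${secret_key}"
}

# Example:
#   create_s3_client_secret default minio minio123
#   create_s3_client_secret default2 minio minio123
```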

```
$ kubectl create secret generic default-secrets \
    --from-literal=s3.client.default.access_key=minio \
    --from-literal=s3.client.default.secret_key=minio123
secret/default-secrets created
$ kubectl create secret generic default2-secrets \
    --from-literal=s3.client.default2.access_key=minio \
    --from-literal=s3.client.default2.secret_key=minio123
secret/default2-secrets created
$ kubectl get secrets | grep default
default-secrets    Opaque   2      62s
default2-secrets   Opaque   2      52s
$ kubectl get secrets default-secrets -o yaml
apiVersion: v1
data:
  s3.client.default.access_key: bWluaW8=
  s3.client.default.secret_key: bWluaW8xMjM=
kind: Secret
metadata:
  creationTimestamp: "2024-07-10T23:02:38Z"
  name: default-secrets
  namespace: default
  resourceVersion: "204387"
  uid: 7fa303d4-ced6-472a-9ebc-38ee6e322305
type: Opaque
```

Create a secret with a custom truststore for the MinIO SSL endpoint

Extract the cacerts file (the JVM's default truststore) from one of the Elasticsearch pods:

```
$ kubectl cp eck-lab-es-default-0:/usr/share/elasticsearch/jdk/lib/security/cacerts cacerts
Defaulted container "elasticsearch" out of: elasticsearch, elastic-internal-init-filesystem (init), elastic-internal-suspend (init), sysctl (init)
tar: Removing leading `/' from member names
$ ls -1
cacerts
private.key
public.crt
```

Add the MinIO certificate to the JVM truststore:
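keytool asks for confirmation before trusting the certificate, as in the session below. For scripted runs, -noprompt skips that question. A sketch that imports the certificate and then lists the new entry to confirm it; the helper name is hypothetical, and KEYTOOL is an override added so the commands can be printed (KEYTOOL=echo) instead of run.

```shell
# Hypothetical helper: import CERT into the truststore STORE under ALIAS
# without the interactive prompt, then list that entry. The store password
# defaults to changeit, the password used in this guide.
import_ca() {
  local store="$1" cert="$2" alias_name="$3" pass="${4:-changeit}"
  "${KEYTOOL:-keytool}" -importcert -noprompt -keystore "$store" -storepass "$pass" \
    -file "$cert" -alias "$alias_name" || return 1
  "${KEYTOOL:-keytool}" -list -keystore "$store" -storepass "$pass" -alias "$alias_name"
}

# Example:
#   import_ca cacerts public.crt custom-s3-svc
```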

```
$ keytool -importcert -keystore cacerts -storepass changeit -file public.crt -alias custom-s3-svc
Owner: CN=minio-service
Issuer: CN=minio-service
Serial number: 1166b223ed7a5e5b64d751d95e2d40929d308e12
Valid from: Wed Jul 10 20:48:53 UTC 2024 until: Thu Jul 10 20:48:53 UTC 2025
Certificate fingerprints:
  SHA1: AB:76:F7:D9:A1:46:5F:6D:FC:FD:63:2B:01:93:AC:D3:22:50:0F:C5
  SHA256: 38:0B:42:5C:F8:B5:96:69:40:89:6C:BA:3D:B3:03:36:9F:23:66:DB:78:27:6D:D8:03:91:35:CB:D3:A1:03:18
Signature algorithm name: SHA256withRSA
Subject Public Key Algorithm: 4096-bit RSA key
Version: 3

Extensions:

#1: ObjectId: 2.5.29.35 Criticality=false
AuthorityKeyIdentifier [
KeyIdentifier [
0000: 00 0A 6F 85 C8 A7 2C 7C 50 FF 66 32 33 B5 C0 16 ..o...,.P.f23...
0010: A4 51 9E BD .Q..
]
]

#2: ObjectId: 2.5.29.19 Criticality=true
BasicConstraints:[
  CA:true
  PathLen: no limit
]

#3: ObjectId: 2.5.29.17 Criticality=false
SubjectAlternativeName [
  DNSName: minio-service.default.svc.cluster.local
]

#4: ObjectId: 2.5.29.14 Criticality=false
SubjectKeyIdentifier [
KeyIdentifier [
0000: 00 0A 6F 85 C8 A7 2C 7C 50 FF 66 32 33 B5 C0 16 ..o...,.P.f23...
0010: A4 51 9E BD .Q..
]
]

Trust this certificate? [no]: yes
Certificate was added to keystore
$ kubectl create secret generic custom-truststore --from-file=cacerts
secret/custom-truststore created
```

Edit the Elasticsearch resource to include the secure settings and the custom truststore

You can edit the resource in place, or, if you used deploy-elastick8s.sh, edit ~/elastick8s/elasticsearch-eck-lab.yaml and apply it.
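For the secureSettings part alone, a JSON merge patch is a non-interactive alternative to kubectl edit. This sketch uses a hypothetical helper name and a KUBECTL override (set KUBECTL=echo to print instead of run). Be aware that a merge patch replaces the whole secureSettings list, so pass every secret that should remain, and that the truststore volume, volume mount and ES_JAVA_OPTS still have to go into the podTemplate as in the full manifest.

```shell
# Hypothetical helper: set spec.secureSettings on an Elasticsearch resource
# to exactly the given secret names, using a JSON merge patch.
patch_secure_settings() {
  local cluster="$1" items="" secret
  shift
  for secret in "$@"; do
    # Append {"secretName":"<secret>"}, comma-separated after the first item.
    items="${items:+${items},}{\"secretName\":\"${secret}\"}"
  done
  "${KUBECTL:-kubectl}" patch elasticsearch "$cluster" --type merge \
    -p "{\"spec\":{\"secureSettings\":[${items}]}}"
}

# Example:
#   patch_secure_settings eck-lab default-secrets default2-secrets
```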

```
$ kubectl edit elasticsearch eck-lab
```

After editing, it should look similar to:

```
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: eck-lab
spec:
  secureSettings:
  - secretName: default-secrets
  - secretName: default2-secrets
  version: 8.14.1
  nodeSets:
  - name: default
    config:
      node.roles: ["master", "data", "ingest", "ml", "remote_cluster_client", "transform"]
      xpack.security.authc.api_key.enabled: true
    podTemplate:
      metadata:
        labels:
          scrape: es
      spec:
        volumes:
        - name: custom-truststore
          secret:
            secretName: custom-truststore
        containers:
        - name: elasticsearch
          resources:
            requests:
              memory: 2Gi
              cpu: 1
            limits:
              memory: 2Gi
              cpu: 1
          volumeMounts:
          - name: custom-truststore
            mountPath: /usr/share/elasticsearch/config/custom-truststore
          env:
          - name: ES_JAVA_OPTS
            value: "-Djavax.net.ssl.trustStore=/usr/share/elasticsearch/config/custom-truststore/cacerts -Djavax.net.ssl.trustStorePassword=changeit"
        initContainers:
        - name: sysctl
          securityContext:
            privileged: true
            runAsUser: 0
          command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
    count: 3
  http:
    service:
      spec:
        type: LoadBalancer
```

Once the change is saved, the Elasticsearch pods should go through a rolling restart.
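To block until the cluster is healthy again, kubectl wait can watch the health that ECK reports on the Elasticsearch resource. This sketch assumes a kubectl recent enough to support --for=jsonpath (1.23 or later) and the resource's .status.health field; the helper name and KUBECTL override are hypothetical. Health may still read green before the restart actually begins, so start it once you see pods cycling (for example with kubectl get pods -w).

```shell
# Hypothetical helper: wait until elasticsearch/<cluster> reports
# .status.health == green, or until the timeout expires.
wait_for_green() {
  local cluster="$1" timeout="${2:-15m}"
  "${KUBECTL:-kubectl}" wait "elasticsearch/${cluster}" \
    --for=jsonpath='{.status.health}'=green --timeout="$timeout"
}

# Example:
#   wait_for_green eck-lab 20m
```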

Verify that the keystore items are in place

$ kubectl exec -it eck-lab-es-default-2 -- bash

Defaulted container "elasticsearch" out of: elasticsearch, elastic-internal-init-filesystem (init), elastic-internal-init-keystore (init), elastic-internal-suspend (init), sysctl (init)

elasticsearch@eck-lab-es-default-2:~$ bin/elasticsearch-keystore list

keystore.seed

s3.client.default.access_key

s3.client.default.secret_key

s3.client.default2.access_key

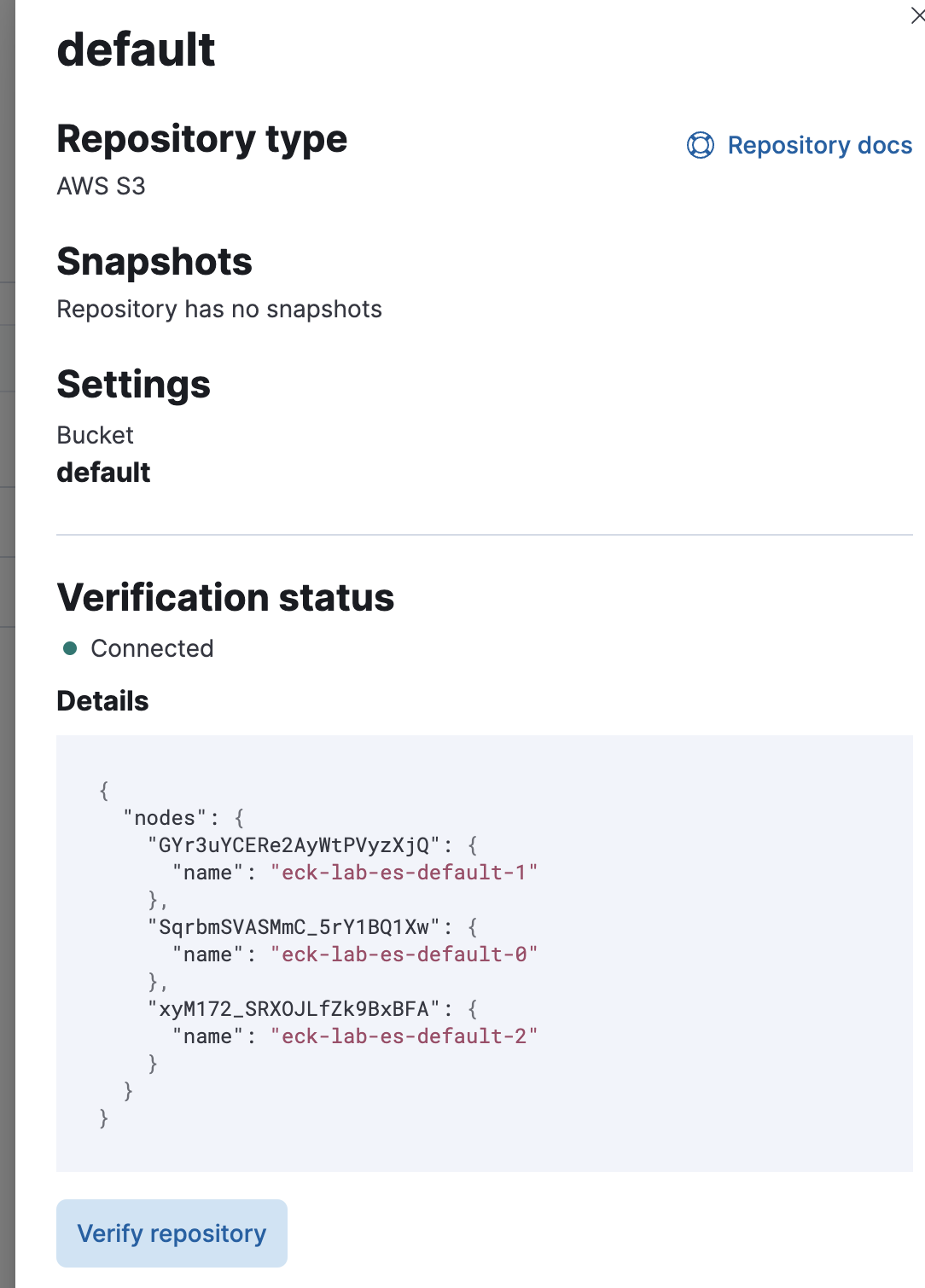

s3.client.default2.secret_keyCreate repo

- this can be done in Kibana Dev Tools
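Outside Dev Tools, the same request can be sent with curl. A sketch with hypothetical helper names: ES_URL and ES_PASSWORD are placeholders for the endpoint and elastic password printed by the deploy script, -k skips verification of the Elasticsearch certificate as in the earlier curl call, and the body adds "client", which defaults to "default" (the same client the repository uses when the setting is omitted). CURL is an override added so the command can be printed (CURL=echo) instead of run.

```shell
# Hypothetical helper: print the S3 repository body. The endpoint defaults to
# the in-cluster MinIO service used throughout this guide.
s3_repo_body() {
  local bucket="$1" client="${2:-default}"
  local endpoint="${MINIO_ENDPOINT:-https://minio-service.default.svc.cluster.local:9000}"
  printf '{"type":"s3","settings":{"bucket":"%s","client":"%s","path_style_access":true,"endpoint":"%s"}}\n' \
    "$bucket" "$client" "$endpoint"
}

# Hypothetical helper: register repository REPO for BUCKET via the REST API.
create_s3_repo() {
  local repo="$1" bucket="$2" client="${3:-default}"
  "${CURL:-curl}" -sk -u "elastic:${ES_PASSWORD:?set ES_PASSWORD}" \
    -H 'Content-Type: application/json' \
    -X POST "${ES_URL:?set ES_URL}/_snapshot/${repo}" \
    -d "$(s3_repo_body "$bucket" "$client")"
}

# Example:
#   ES_URL=https://<es-endpoint>:9200 ES_PASSWORD='<elastic password>' create_s3_repo default default
```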

```
POST _snapshot/default
{
  "type": "s3",
  "settings": {
    "bucket": "default",
    "path_style_access": true,
    "endpoint": "https://minio-service.default.svc.cluster.local:9000"
  }
}
```

```
{
  "acknowledged": true
}
```

Test the repository

We can run _verify against it:

```
POST /_snapshot/default/_verify
```

```
{
  "nodes": {
    "GYr3uYCERe2AyWtPVyzXjQ": {
      "name": "eck-lab-es-default-1"
    },
    "SqrbmSVASMmC_5rY1BQ1Xw": {
      "name": "eck-lab-es-default-0"
    },
    "xyM172_SRXOJLfZk9BxBFA": {
      "name": "eck-lab-es-default-2"
    }
  }
}
```

The repository can also be verified from Snapshot and Restore in Kibana.
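_verify checks that each node can access the repository; taking a small snapshot exercises the full snapshot path end to end. A sketch with a hypothetical helper name; ES_URL and ES_PASSWORD are placeholders for your endpoint and elastic password, and CURL is an override added so the command can be printed (CURL=echo) instead of run.

```shell
# Hypothetical helper: take snapshot NAME in repository REPO and wait for it
# to complete. Without a request body the snapshot covers the default set of
# indices and the cluster state.
take_test_snapshot() {
  local repo="$1" name="${2:-test-snapshot-1}"
  "${CURL:-curl}" -sk -u "elastic:${ES_PASSWORD:?set ES_PASSWORD}" \
    -X PUT "${ES_URL:?set ES_URL}/_snapshot/${repo}/${name}?wait_for_completion=true"
}

# Example:
#   ES_URL=https://<es-endpoint>:9200 ES_PASSWORD='<elastic password>' take_test_snapshot default
```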

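You can add the default2 repository as well. It will use the same S3 server but store its snapshots in the default2 bucket instead. Set "client": "default2" in its settings so that it uses the s3.client.default2.* credentials from the keystore; without it, the repository uses the default client.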

Enjoy!!